These rule lists have a logical structure, much like decision lists or one-sided decision trees, consisting of a sequence of IF-THEN rules. At a global level, SBRL identifies decision rules that apply to the entire dataset, providing insights into general model behavior. At a local level, it generates rule lists for specific instances or subsets of data, enabling interpretable explanations at a more granular level. SBRL offers flexibility in understanding the model’s behavior and promotes transparency and trust.
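To make the IF-THEN structure concrete, here is a minimal sketch of how a rule list produces both a prediction and its explanation. The rules, field names, and outcomes are hypothetical and hand-written for illustration; an actual SBRL model would learn the rules and their order from data, which is not shown here.

```python
from typing import Callable, Dict, List, Tuple

Record = Dict[str, float]
Rule = Tuple[str, Callable[[Record], bool], str]

# Hypothetical rules for illustration only; a real rule-list learner
# would derive the rules and their ordering from training data.
RULES: List[Rule] = [
    ("income < 20000 AND debt > 10000",
     lambda r: r["income"] < 20000 and r["debt"] > 10000, "deny"),
    ("late_payments >= 3", lambda r: r["late_payments"] >= 3, "deny"),
    ("income >= 50000", lambda r: r["income"] >= 50000, "approve"),
]
DEFAULT = "review"  # outcome used when no rule matches


def predict_with_explanation(record: Record) -> Tuple[str, str]:
    """Return (prediction, explanation); the first matching rule wins."""
    for description, condition, outcome in RULES:
        if condition(record):
            return outcome, f"IF {description} THEN {outcome}"
    return DEFAULT, f"ELSE {DEFAULT}"
```

Because rules are checked in order, the rule that fired is itself the local explanation for one record, while the full ordered list serves as the global description of the model.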

Challenges On The Way To Explainable AI

It focuses on providing detailed explanations at a local level rather than globally. ALE can be applied only at a global scale, and it gives a thorough picture of how each feature relates to the model’s predictions across the entire dataset. It does not provide localized or individualized explanations for specific instances or observations within the data. ALE’s strength lies in providing comprehensive insights into feature effects at a global scale, helping analysts identify important variables and their impact on the model’s output. In machine learning, a “black box” refers to a model or algorithm that produces outputs without providing clear insights into how those outputs were derived. It essentially means that the internal workings of the model are not easily interpretable or explainable to humans.
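As a rough illustration of the global feature effects that ALE describes, the sketch below computes a simplified first-order accumulated local effect for one feature of a black-box `predict` function. The function name `ale_1d`, the caller-supplied bin edges, and the centering scheme are assumptions made for this example; production implementations typically choose bins from quantiles and handle many edge cases.

```python
from typing import Callable, List, Sequence

Row = List[float]


def ale_1d(predict: Callable[[Row], float], rows: Sequence[Row],
           feature: int, edges: Sequence[float]) -> List[float]:
    """Simplified first-order ALE of one feature, evaluated at each bin edge.

    `edges` must be increasing and span the observed feature values.
    """
    effects, counts = [], []
    for k in range(len(edges) - 1):
        lo, hi = edges[k], edges[k + 1]
        # Rows whose feature value falls in this bin (first bin includes lo).
        in_bin = [r for r in rows
                  if (lo < r[feature] <= hi) or (k == 0 and r[feature] == lo)]
        diffs = []
        for r in in_bin:
            upper, lower = list(r), list(r)
            upper[feature], lower[feature] = hi, lo
            # Local effect: prediction change when only this feature moves
            # from the lower to the upper edge of its bin.
            diffs.append(predict(upper) - predict(lower))
        effects.append(sum(diffs) / len(diffs) if diffs else 0.0)
        counts.append(len(in_bin))

    # Accumulate the averaged local effects across bins.
    accumulated = [0.0]
    for effect in effects:
        accumulated.append(accumulated[-1] + effect)

    # Center so the count-weighted average over bin midpoints is zero.
    total = sum(counts)
    mids = [(accumulated[k] + accumulated[k + 1]) / 2
            for k in range(len(effects))]
    mean = sum(m * c for m, c in zip(mids, counts)) / total if total else 0.0
    return [a - mean for a in accumulated]
```

The result is one curve for the whole dataset, which is why this kind of summary says nothing about why a single observation received its prediction.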

- ML models are often thought of as black boxes that are impossible to interpret.² Neural networks used in deep learning are some of the hardest for a human to understand.

- Explainability also helps ensure that AI is ethical, providing an advantage in the face of technical or legal challenges.

- It functions largely as a visualization tool, and can visualize the output of a machine learning model to make it more understandable.

- With a market forecast of $21 billion by 2030, explainable AI technology might be pivotal to bringing transparency to the machinations of computer minds.

- This means that a wide array of explanation execution or integration methods may exist within a system.

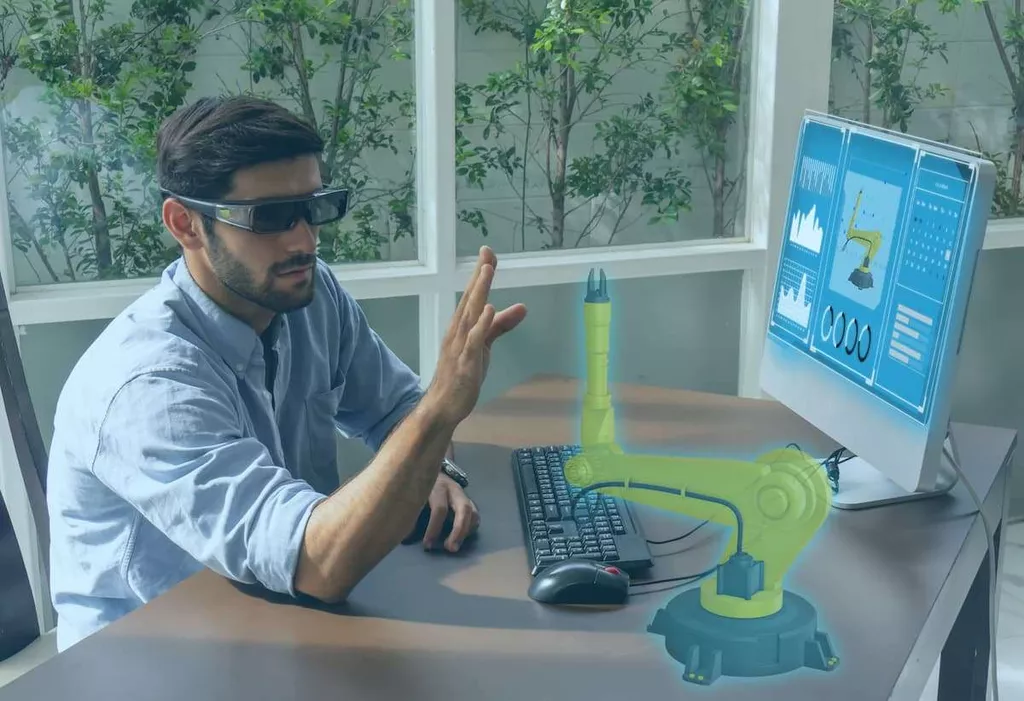

- Visualization tools improve understanding by providing graphical representations of AI decision processes.

Explainable AI In Manufacturing

In another example, implementing XAI in the judicial system raises concerns about bias. Bodies of research show biases against ethnic groups in decision-making with XAI. For example, a random forest model might provide better predictive performance for a financial forecasting task than a decision tree, but the decision tree provides more straightforward explanations. For instance, applying SHAP values to a large-scale deep learning model requires significant computational resources as well as expertise in machine learning and cooperative game theory. Organizations may lack the technical infrastructure and skilled personnel to effectively implement and maintain XAI systems. Developing and integrating explanation methods, maintaining model performance, and ensuring scalability can be technically challenging.
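SHAP is built on Shapley values from cooperative game theory, and a small worked example helps show where the computational cost comes from. The sketch below computes exact Shapley values by enumerating every coalition of features, which takes on the order of 2^n model evaluations for n features. The `toy_value` function and its feature names are hypothetical; this is not the API of any SHAP library, which relies on approximations to stay tractable.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Dict, FrozenSet, List


def shapley_values(players: List[str],
                   value: Callable[[FrozenSet[str]], float]) -> Dict[str, float]:
    """Exact Shapley values by enumerating every coalition."""
    n = len(players)
    result = {}
    for p in players:
        others = [q for q in players if q != p]
        total = 0.0
        for size in range(n):
            # Probability weight of a coalition of this size preceding p.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                # Marginal contribution of p when joining coalition s.
                total += weight * (value(s | {p}) - value(s))
        result[p] = total
    return result


def toy_value(coalition: FrozenSet[str]) -> float:
    """Hypothetical model output when only these features are known."""
    score = 0.0
    if "income" in coalition:
        score += 10.0
    if "age" in coalition:
        score += 5.0
    if "income" in coalition and "age" in coalition:
        score += 4.0  # interaction term, split between the two features
    return score


attributions = shapley_values(["income", "age", "zip"], toy_value)
```

The attributions sum to the difference between the full model output and the empty-coalition baseline, and a feature the model never uses (`zip` here) receives zero.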

An AI System Should Provide Evidence Or Reasons For All Its Outputs

AI helps judges make decisions about sentences and assists surgeons during operations. It is the responsibility of software engineers to make sure that AI clearly describes how it arrived at certain conclusions. If those conclusions come from erroneous premises, then users can’t really benefit from them or trust them. Overall, explainable AI helps to promote accuracy, fairness, and transparency in your organization. Whatever the given explanation is, it has to be meaningful and provided in a way that the intended users can understand. If there’s a range of users with diverse knowledge and skill sets, the system should provide a range of explanations to meet the needs of those users.

To enhance interpretability, AI systems often incorporate visual aids and narrative explanations. For instance, interpretability tools can enable supply chain managers to understand why a certain supplier is recommended and thus make better decisions. In an age where industries are increasingly influenced by artificial intelligence, openness and trust in such systems are essential. As medical operations become more and more sophisticated, XAI plays an important role in ensuring the reliability of even the tiniest details provided by AI models. The Knowledge Limits principle highlights the importance of AI systems recognizing situations where they were not designed or authorized to operate, or where their answer may be unreliable. If you have an idea for an explainable AI solution to build, or if you’re still unsure of how explainable your software needs to be, consult our XAI experts.
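One simple way to approximate the Knowledge Limits principle is to have the system abstain when its confidence is low instead of returning its best guess. The sketch below assumes the model exposes a probability per candidate output; the function name and the threshold value are hypothetical and would need tuning for a real application.

```python
from typing import Dict, Optional, Tuple

CONFIDENCE_THRESHOLD = 0.8  # hypothetical cut-off; tune per application


def answer_or_abstain(probabilities: Dict[str, float],
                      threshold: float = CONFIDENCE_THRESHOLD
                      ) -> Tuple[Optional[str], str]:
    """Return the top label, or None plus a reason when confidence is low."""
    if not probabilities:
        return None, "no candidate outputs: input is outside the system's scope"
    label, confidence = max(probabilities.items(), key=lambda kv: kv[1])
    if confidence < threshold:
        # Abstain and say why, rather than return an unreliable answer.
        return None, f"low confidence ({confidence:.2f} < {threshold:.2f})"
    return label, f"confidence {confidence:.2f}"
```

A raw probability is only a rough proxy for reliability, so a threshold like this is a starting point rather than a guarantee that the system knows its limits.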

With all of that in mind, it’s essential for stakeholders to understand AI decision-making processes. Transparency in data gathering involves informing users about what data the AI system collects, why it collects this data, and how it uses this data. This is important for respecting user privacy and for building trust in the AI system. This might involve designing the AI system to learn from its mistakes, or providing users with the option to correct the AI system when it makes an error.

Although the model is capable of mimicking human language, it also internalized a lot of toxic content from the internet during training. Decisions about hiring and financial services use cases such as credit scores and loan approvals are important and worth explaining. However, no one is likely to be physically harmed (at least not right away) if one of these algorithms makes a bad recommendation. This includes the process of stress testing models on edge cases and anomalies to ensure that they can handle any unexpected inputs. Explainable AI (XAI) addresses these concerns by making the inner workings of AI applications understandable and transparent.

For instance, if an AI system is used for language translation, it should flag sentences or words it can’t translate with high confidence, rather than providing a misleading or incorrect translation. Beyond the technical measures, aligning AI systems with regulatory requirements of transparency and fairness contributes greatly to XAI. The alignment isn’t merely a matter of compliance but a step toward fostering trust. AI models that demonstrate adherence to regulatory principles through their design and operation are more likely to be considered explainable. Feature importance analysis is one such technique, dissecting the influence of each input variable on the model’s predictions, much like a biologist would study the impact of environmental factors on an ecosystem.
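Feature importance can be estimated in several ways. The sketch below uses permutation importance, chosen here only as one common, model-agnostic option: shuffle one feature column at a time and measure how much the prediction error grows. The function names, the mean-squared-error metric, and the default repeat count are assumptions for this example.

```python
import random
from typing import Callable, List, Sequence

Row = List[float]


def mean_squared_error(predict: Callable[[Row], float],
                       rows: Sequence[Row], targets: Sequence[float]) -> float:
    return sum((predict(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(predict: Callable[[Row], float],
                           rows: Sequence[Row], targets: Sequence[float],
                           n_repeats: int = 10, seed: int = 0) -> List[float]:
    """Average increase in error when each feature column is shuffled."""
    rng = random.Random(seed)  # fixed seed keeps the sketch reproducible
    baseline = mean_squared_error(predict, rows, targets)
    importances = []
    for j in range(len(rows[0])):
        increases = []
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            # Rebuild the rows with only feature j replaced by shuffled values.
            shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
            increases.append(
                mean_squared_error(predict, shuffled, targets) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances
```

A feature the model ignores scores zero, since shuffling it cannot change any prediction, while features the model relies on heavily produce a large error increase.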

This increases trust in the system and allows users to challenge decisions they believe are incorrect. Simplify the process of model evaluation while increasing model transparency and traceability. Prediction accuracy: Accuracy is a key component of how successful the use of AI is in everyday operation.

The key distinction is that explainable AI strives to make the inner workings of these sophisticated models accessible and understandable to humans. As these intelligent systems become more sophisticated, the risk of operating them without oversight or understanding increases. By incorporating explainable structures into these systems, developers, regulators, and users are afforded an opportunity for recourse in the event of erroneous or biased outcomes.

This has led many to want AI to be more transparent about how it operates on a day-to-day basis. Excella AI Engineer Melisa Bardhi joins host John Gilroy of Federal Tech Podcast to examine how artificial intelligence… For instance, a recommender system gives the owner a reason for the given recommendation. LLMOps, or Large Language Model Operations, encompasses the practices, techniques, and tools used to deploy, monitor, and maintain LLMs effectively.

Deep learning algorithms are increasingly important in healthcare use cases such as cancer screening, where it’s essential for doctors to understand the basis for an algorithm’s diagnosis. A false negative could mean that a patient doesn’t receive life-saving treatment. A false positive, on the other hand, could result in a patient receiving costly and invasive treatment when it’s not necessary. A level of explainability is essential for radiologists and oncologists seeking to take full advantage of the growing benefits of AI.

According to a recent survey, 81% of business leaders believe that explainable AI is important for their organization. Another study found that 90% of consumers are more likely to trust a company that uses explainable AI. These statistics highlight the importance of XAI in today’s world, where AI is becoming increasingly prevalent in different domains and applications. To find out how NetApp can help you deliver the data management and data governance that are essential to explainable AI, visit netapp.com/artificial-intelligence/. However, given the mountains of data that may be used to train an AI algorithm, “attainable” is not as simple as it sounds.

Note that the quality of the explanation, whether it’s correct, informative, or easy to understand, is not explicitly measured by this principle. These factors are parts of the meaningful and explanation accuracy principles, which we’ll explore in more detail below. This is where XAI comes in handy, providing transparent reasoning behind AI decisions, fostering trust, and encouraging the adoption of AI-driven solutions. For instance, the European Union’s General Data Protection Regulation (GDPR) gives individuals the “right to explanation”. This means individuals have the right to understand how decisions affecting them are being reached, including those made by AI. Hence, companies using AI in these regions need to make sure AI systems can provide clear explanations for their decisions.
